#include <llama_node.hpp>

| Return type | Member |
| --- | --- |
| | LlamaNode () |
| rclcpp_lifecycle::node_interfaces::LifecycleNodeInterface::CallbackReturn | on_configure (const rclcpp_lifecycle::State &) |
| rclcpp_lifecycle::node_interfaces::LifecycleNodeInterface::CallbackReturn | on_activate (const rclcpp_lifecycle::State &) |
| rclcpp_lifecycle::node_interfaces::LifecycleNodeInterface::CallbackReturn | on_deactivate (const rclcpp_lifecycle::State &) |
| rclcpp_lifecycle::node_interfaces::LifecycleNodeInterface::CallbackReturn | on_cleanup (const rclcpp_lifecycle::State &) |
| rclcpp_lifecycle::node_interfaces::LifecycleNodeInterface::CallbackReturn | on_shutdown (const rclcpp_lifecycle::State &) |
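The five transition callbacks above follow the ROS 2 managed-node pattern: each one receives the state the node is leaving and returns a `CallbackReturn`. The order in which they are typically driven can be sketched as follows. This is only an illustration built on stand-in types, not the real `rclcpp_lifecycle` classes; `SketchNode`, `State`, `run_full_cycle`, and the local `CallbackReturn` enum are hypothetical names, and the sketch says nothing about what `LlamaNode` actually does inside each callback.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Stand-in for LifecycleNodeInterface::CallbackReturn (not the real rclcpp type).
enum class CallbackReturn { SUCCESS, FAILURE, ERROR };

// Stand-in for rclcpp_lifecycle::State; only a label is kept here.
struct State {
  std::string label;
};

// Hypothetical node that only records which transition callback ran.
class SketchNode {
public:
  CallbackReturn on_configure(const State &) { return record("configure"); }
  CallbackReturn on_activate(const State &) { return record("activate"); }
  CallbackReturn on_deactivate(const State &) { return record("deactivate"); }
  CallbackReturn on_cleanup(const State &) { return record("cleanup"); }
  CallbackReturn on_shutdown(const State &) { return record("shutdown"); }

  const std::vector<std::string> &history() const { return history_; }

private:
  CallbackReturn record(const std::string &name) {
    history_.push_back(name);
    return CallbackReturn::SUCCESS;
  }

  std::vector<std::string> history_;
};

// Drives one full pass through the transitions and returns the call order.
// Each callback is handed the state the node is leaving.
inline std::vector<std::string> run_full_cycle() {
  SketchNode node;
  bool ok = node.on_configure(State{"unconfigured"}) == CallbackReturn::SUCCESS;
  ok = ok && node.on_activate(State{"inactive"}) == CallbackReturn::SUCCESS;
  ok = ok && node.on_deactivate(State{"active"}) == CallbackReturn::SUCCESS;
  ok = ok && node.on_cleanup(State{"inactive"}) == CallbackReturn::SUCCESS;
  ok = ok && node.on_shutdown(State{"unconfigured"}) == CallbackReturn::SUCCESS;
  assert(ok);
  return node.history();
}
```

In a real deployment the transitions are requested externally (for example by a launch file or a lifecycle manager), so application code would not call these callbacks directly as the sketch does.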

| Return type | Member |
| --- | --- |
| void | get_metadata_service_callback (const std::shared_ptr< llama_msgs::srv::GetMetadata::Request > request, std::shared_ptr< llama_msgs::srv::GetMetadata::Response > response) |
| void | tokenize_service_callback (const std::shared_ptr< llama_msgs::srv::Tokenize::Request > request, std::shared_ptr< llama_msgs::srv::Tokenize::Response > response) |
| void | detokenize_service_callback (const std::shared_ptr< llama_msgs::srv::Detokenize::Request > request, std::shared_ptr< llama_msgs::srv::Detokenize::Response > response) |
| void | generate_embeddings_service_callback (const std::shared_ptr< llama_msgs::srv::GenerateEmbeddings::Request > request, std::shared_ptr< llama_msgs::srv::GenerateEmbeddings::Response > response) |
| void | rerank_documents_service_callback (const std::shared_ptr< llama_msgs::srv::RerankDocuments::Request > request, std::shared_ptr< llama_msgs::srv::RerankDocuments::Response > response) |
| void | format_chat_service_callback (const std::shared_ptr< llama_msgs::srv::FormatChatMessages::Request > request, std::shared_ptr< llama_msgs::srv::FormatChatMessages::Response > response) |
| void | list_loras_service_callback (const std::shared_ptr< llama_msgs::srv::ListLoRAs::Request > request, std::shared_ptr< llama_msgs::srv::ListLoRAs::Response > response) |
| void | update_loras_service_callback (const std::shared_ptr< llama_msgs::srv::UpdateLoRAs::Request > request, std::shared_ptr< llama_msgs::srv::UpdateLoRAs::Response > response) |
| rclcpp_action::GoalResponse | handle_goal (const rclcpp_action::GoalUUID &uuid, std::shared_ptr< const GenerateResponse::Goal > goal) |
| rclcpp_action::CancelResponse | handle_cancel (const std::shared_ptr< GoalHandleGenerateResponse > goal_handle) |
| void | handle_accepted (const std::shared_ptr< GoalHandleGenerateResponse > goal_handle) |

◆ GenerateResponse

◆ GoalHandleGenerateResponse

Initial value:

rclcpp_action::ServerGoalHandle<GenerateResponse>

◆ LlamaNode()

◆ create_llama()

void LlamaNode::create_llama() [protected, virtual]

◆ destroy_llama()

void LlamaNode::destroy_llama() [protected]

◆ detokenize_service_callback()

void LlamaNode::detokenize_service_callback(const std::shared_ptr< llama_msgs::srv::Detokenize::Request > request, std::shared_ptr< llama_msgs::srv::Detokenize::Response > response) [private]

◆ execute()

◆ format_chat_service_callback()

void LlamaNode::format_chat_service_callback(const std::shared_ptr< llama_msgs::srv::FormatChatMessages::Request > request, std::shared_ptr< llama_msgs::srv::FormatChatMessages::Response > response) [private]

◆ generate_embeddings_service_callback()

void LlamaNode::generate_embeddings_service_callback(const std::shared_ptr< llama_msgs::srv::GenerateEmbeddings::Request > request, std::shared_ptr< llama_msgs::srv::GenerateEmbeddings::Response > response) [private]

◆ get_metadata_service_callback()

void LlamaNode::get_metadata_service_callback(const std::shared_ptr< llama_msgs::srv::GetMetadata::Request > request, std::shared_ptr< llama_msgs::srv::GetMetadata::Response > response) [private]

◆ goal_empty()

bool LlamaNode::goal_empty(std::shared_ptr< const GenerateResponse::Goal > goal) [protected, virtual]

◆ handle_accepted()

◆ handle_cancel()

◆ handle_goal()

rclcpp_action::GoalResponse LlamaNode::handle_goal(const rclcpp_action::GoalUUID & uuid, std::shared_ptr< const GenerateResponse::Goal > goal) [private]
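An action server's goal callback decides whether an incoming goal is accepted or rejected. One plausible way the signature of `handle_goal()` and the overridable `goal_empty()` hook could fit together is sketched below. This is an assumption, not the documented behavior of `LlamaNode`: the `GoalGateSketch` class, the `Goal` struct with its `prompt` field, and the local `GoalResponse` and `GoalUUID` definitions are hypothetical stand-ins that only mirror the shape of the corresponding `rclcpp_action` types.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <memory>
#include <string>

// Stand-ins mirroring rclcpp_action::GoalResponse and rclcpp_action::GoalUUID.
enum class GoalResponse { REJECT, ACCEPT_AND_EXECUTE, ACCEPT_AND_DEFER };
using GoalUUID = std::array<std::uint8_t, 16>;

// Hypothetical goal payload; the real GenerateResponse::Goal may differ.
struct Goal {
  std::string prompt;
};

class GoalGateSketch {
public:
  virtual ~GoalGateSketch() = default;

  // Rejects goals that carry nothing to process, accepts everything else.
  GoalResponse handle_goal(const GoalUUID &uuid,
                           std::shared_ptr<const Goal> goal) {
    (void)uuid; // the identifier is not needed for this decision
    if (goal_empty(goal)) {
      return GoalResponse::REJECT;
    }
    return GoalResponse::ACCEPT_AND_EXECUTE;
  }

protected:
  // Virtual so a subclass with a richer goal type can redefine "empty".
  virtual bool goal_empty(std::shared_ptr<const Goal> goal) {
    return !goal || goal->prompt.empty();
  }
};
```

Keeping the emptiness check in a virtual hook would let a derived node with additional goal fields change the acceptance rule without touching the goal callback itself.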

◆ list_loras_service_callback()

void LlamaNode::list_loras_service_callback(const std::shared_ptr< llama_msgs::srv::ListLoRAs::Request > request, std::shared_ptr< llama_msgs::srv::ListLoRAs::Response > response) [private]

◆ on_activate()

rclcpp_lifecycle::node_interfaces::LifecycleNodeInterface::CallbackReturn LlamaNode::on_activate(const rclcpp_lifecycle::State &)

◆ on_cleanup()

rclcpp_lifecycle::node_interfaces::LifecycleNodeInterface::CallbackReturn LlamaNode::on_cleanup(const rclcpp_lifecycle::State &)

◆ on_configure()

rclcpp_lifecycle::node_interfaces::LifecycleNodeInterface::CallbackReturn LlamaNode::on_configure(const rclcpp_lifecycle::State &)

◆ on_deactivate()

rclcpp_lifecycle::node_interfaces::LifecycleNodeInterface::CallbackReturn LlamaNode::on_deactivate(const rclcpp_lifecycle::State &)

◆ on_shutdown()

rclcpp_lifecycle::node_interfaces::LifecycleNodeInterface::CallbackReturn LlamaNode::on_shutdown(const rclcpp_lifecycle::State &)

◆ rerank_documents_service_callback()

void LlamaNode::rerank_documents_service_callback(const std::shared_ptr< llama_msgs::srv::RerankDocuments::Request > request, std::shared_ptr< llama_msgs::srv::RerankDocuments::Response > response) [private]

◆ send_text()

◆ tokenize_service_callback()

void LlamaNode::tokenize_service_callback(const std::shared_ptr< llama_msgs::srv::Tokenize::Request > request, std::shared_ptr< llama_msgs::srv::Tokenize::Response > response) [private]
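All of the service callbacks in this class share the same shape: a shared pointer to the request that is read, and a shared pointer to the response that is filled in place, with no return value. A minimal sketch of that shape is shown below. It makes several assumptions: `TokenizeRequest`, `TokenizeResponse`, their fields, and `tokenize_callback_sketch` are hypothetical stand-ins rather than the real `llama_msgs::srv::Tokenize` types, and the whitespace split is only a placeholder for the model's tokenizer.

```cpp
#include <cassert>
#include <memory>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical stand-ins for the generated service types; real fields may differ.
struct TokenizeRequest {
  std::string text;
};

struct TokenizeResponse {
  std::vector<std::string> tokens;
};

// Same parameter shape as the service callbacks listed in this class:
// the request is read, the response is filled in place.
void tokenize_callback_sketch(const std::shared_ptr<TokenizeRequest> request,
                              std::shared_ptr<TokenizeResponse> response) {
  assert(request && response);

  // Placeholder logic: split on whitespace instead of calling a model tokenizer.
  std::istringstream stream(request->text);
  std::string word;
  while (stream >> word) {
    response->tokens.push_back(word);
  }
}
```

With `rclcpp`, a member callback of this shape is usually bound with `std::bind` or a lambda when the service is created, which is presumably how the `*_service_` members of this class are populated.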

◆ update_loras_service_callback()

void LlamaNode::update_loras_service_callback(const std::shared_ptr< llama_msgs::srv::UpdateLoRAs::Request > request, std::shared_ptr< llama_msgs::srv::UpdateLoRAs::Response > response) [private]

◆ detokenize_service_

rclcpp::Service<llama_msgs::srv::Detokenize>::SharedPtr llama_ros::LlamaNode::detokenize_service_ [private]

◆ format_chat_service_

rclcpp::Service<llama_msgs::srv::FormatChatMessages>::SharedPtr llama_ros::LlamaNode::format_chat_service_ [private]

◆ generate_embeddings_service_

rclcpp::Service<llama_msgs::srv::GenerateEmbeddings>::SharedPtr llama_ros::LlamaNode::generate_embeddings_service_ [private]

◆ generate_response_action_server_

rclcpp_action::Server<GenerateResponse>::SharedPtr llama_ros::LlamaNode::generate_response_action_server_ [private]

◆ get_metadata_service_

rclcpp::Service<llama_msgs::srv::GetMetadata>::SharedPtr llama_ros::LlamaNode::get_metadata_service_ [private]

◆ goal_handle_

◆ list_loras_service_

rclcpp::Service<llama_msgs::srv::ListLoRAs>::SharedPtr llama_ros::LlamaNode::list_loras_service_ [private]

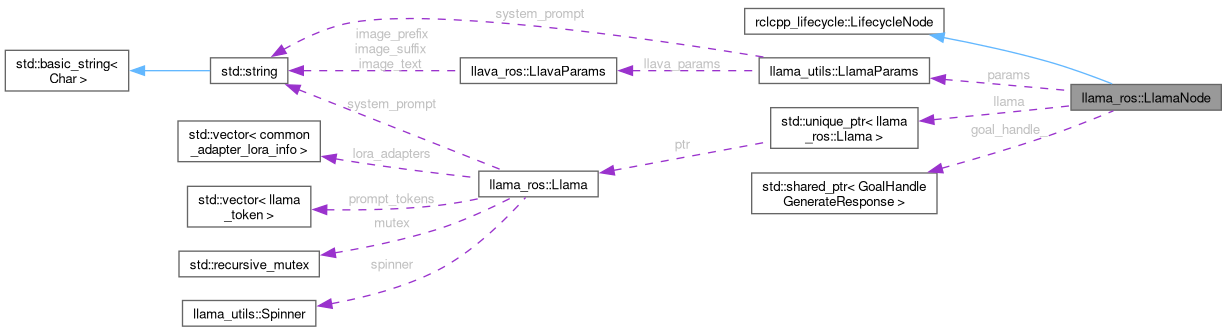

◆ llama

std::unique_ptr<Llama> llama_ros::LlamaNode::llama [protected]

◆ params

◆ params_declared

bool llama_ros::LlamaNode::params_declared [protected]

◆ rerank_documents_service_

rclcpp::Service<llama_msgs::srv::RerankDocuments>::SharedPtr llama_ros::LlamaNode::rerank_documents_service_ [private]

◆ tokenize_service_

rclcpp::Service<llama_msgs::srv::Tokenize>::SharedPtr llama_ros::LlamaNode::tokenize_service_ [private]

◆ update_loras_service_

rclcpp::Service<llama_msgs::srv::UpdateLoRAs>::SharedPtr llama_ros::LlamaNode::update_loras_service_ [private]

The documentation for this class was generated from the following files: