llama_ros::Llama Class Reference

#include <llama.hpp>

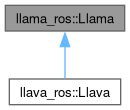

Inheritance diagram for llama_ros::Llama:

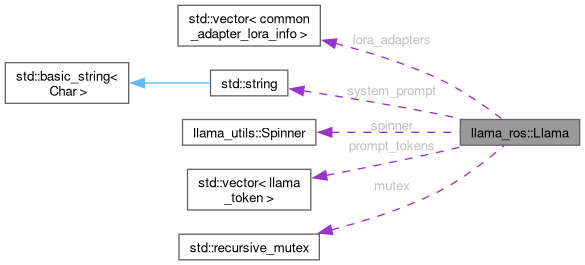

Collaboration diagram for llama_ros::Llama:

Public Member Functions

| Llama (const struct common_params &params, std::string system_prompt="", bool initial_reset=true) | |

| virtual | ~Llama () |

| std::vector< llama_token > | tokenize (const std::string &text, bool add_bos, bool special=false) |

| std::string | detokenize (const std::vector< llama_token > &tokens) |

| virtual void | reset () |

| void | cancel () |

| std::string | format_chat_prompt (std::vector< struct common_chat_msg > chat_msgs, bool add_ass) |

| std::vector< struct LoRA > | list_loras () |

| void | update_loras (std::vector< struct LoRA > loras) |

| std::vector< llama_token > | truncate_tokens (const std::vector< llama_token > &tokens, int limit_size, bool add_eos=true) |

| struct EmbeddingsOuput | generate_embeddings (const std::string &input_prompt, int normalization=2) |

| struct EmbeddingsOuput | generate_embeddings (const std::vector< llama_token > &tokens, int normalization=2) |

| float | rank_document (const std::string &query, const std::string &document) |

| std::vector< float > | rank_documents (const std::string &query, const std::vector< std::string > &documents) |

| struct ResponseOutput | generate_response (const std::string &input_prompt, struct common_params_sampling sparams, GenerateResponseCallback callbakc=nullptr, std::vector< std::string > stop={}) |

| struct ResponseOutput | generate_response (const std::string &input_prompt, GenerateResponseCallback callbakc=nullptr, std::vector< std::string > stop={}) |

| const struct llama_context * | get_ctx () |

| const struct llama_model * | get_model () |

| const struct llama_vocab * | get_vocab () |

| int | get_n_ctx () |

| int | get_n_ctx_train () |

| int | get_n_embd () |

| int | get_n_vocab () |

| std::string | get_metadata (const std::string &key, size_t size) |

| std::string | get_metadata (const std::string &model_name, const std::string &key, size_t size) |

| int | get_int_metadata (const std::string &key, size_t size) |

| int | get_int_metadata (const std::string &model_name, const std::string &key, size_t size) |

| float | get_float_metadata (const std::string &key, size_t size) |

| float | get_float_metadata (const std::string &model_name, const std::string &key, size_t size) |

| struct Metadata | get_metadata () |

| bool | is_embedding () |

| bool | is_reranking () |

| bool | add_bos_token () |

| bool | is_eog () |

| llama_token | get_token_eos () |

| llama_token | get_token_bos () |

| llama_token | get_token_sep () |

Protected Member Functions

| virtual void | load_prompt (const std::string &input_prompt, bool add_pfx, bool add_sfx) |

| StopType | find_stop (std::vector< struct CompletionOutput > completion_result_list, std::vector< std::string > stopping_words) |

| StopType | find_stop_word (std::vector< struct CompletionOutput > completion_result_list, std::string stopping_word) |

| bool | eval_system_prompt () |

| virtual bool | eval_prompt () |

| bool | eval_prompt (std::vector< llama_token > prompt_tokens) |

| bool | eval_token (llama_token token) |

| bool | eval (std::vector< llama_token > tokens) |

| virtual bool | eval (struct llama_batch batch) |

| std::vector< struct TokenProb > | get_probs () |

| struct CompletionOutput | sample () |

Protected Attributes

| struct common_params | params |

| struct common_init_result | llama_init |

| struct llama_context * | ctx |

| struct llama_model * | model |

| std::vector< common_adapter_lora_info > | lora_adapters |

| struct common_sampler * | sampler |

| struct ggml_threadpool * | threadpool |

| struct ggml_threadpool * | threadpool_batch |

| std::string | system_prompt |

| bool | canceled |

| llama_utils::Spinner | spinner |

| std::vector< llama_token > | prompt_tokens |

| int32_t | n_past |

| int32_t | n_consumed |

| int32_t | ga_i |

Private Attributes

| std::recursive_mutex | mutex |
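The reference does not say which members lock `mutex`. A `std::recursive_mutex` is typically chosen so that one locking member can call another on the same thread. The sketch below illustrates that pattern with a hypothetical `Guarded` class; its members and behavior are assumptions for illustration and are not taken from `llama_ros::Llama`.

```cpp
#include <cassert>
#include <mutex>
#include <string>

// Hypothetical class, not part of llama_ros: shows re-entrant locking only.
class Guarded {
public:
  // Clears the state while holding the lock.
  void reset() {
    std::lock_guard<std::recursive_mutex> lock(mutex_);
    state_.clear();
  }

  // Holds the lock and calls reset(), which locks again on the same thread.
  // With a plain std::mutex the second lock would be undefined behavior
  // (typically a deadlock); a recursive mutex permits it.
  std::string respond(const std::string &prompt) {
    std::lock_guard<std::recursive_mutex> lock(mutex_);
    reset();
    state_ = prompt;
    return state_;
  }

private:
  std::recursive_mutex mutex_;
  std::string state_;
};
```

The lock is released only after every nested `lock_guard` on the owning thread has been destroyed.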

Constructor & Destructor Documentation

◆ Llama()

| Llama::Llama | ( | const struct common_params & | params, |

| std::string | system_prompt = "", | ||

| bool | initial_reset = true ) |

◆ ~Llama()

| Llama::~Llama | ( | ) | virtual |

Member Function Documentation

◆ add_bos_token()

| bool Llama::add_bos_token | ( | ) | inline |

◆ cancel()

| void Llama::cancel | ( | ) |

◆ detokenize()

| std::string Llama::detokenize | ( | const std::vector< llama_token > & | tokens | ) |

◆ eval() [1/2]

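| bool Llama::eval | ( | std::vector< llama_token > | tokens | ) | protected |

◆ eval() [2/2]

| bool Llama::eval | ( | struct llama_batch | batch | ) | protected virtual |

Reimplemented in llava_ros::Llava.

◆ eval_prompt() [1/2]

| bool Llama::eval_prompt | ( | ) | protected virtual |

Reimplemented in llava_ros::Llava.

◆ eval_prompt() [2/2]

| bool Llama::eval_prompt | ( | std::vector< llama_token > | prompt_tokens | ) | protected |

◆ eval_system_prompt()

| bool Llama::eval_system_prompt | ( | ) | protected |

◆ eval_token()

| bool Llama::eval_token | ( | llama_token | token | ) | protected |

◆ find_stop()

| StopType Llama::find_stop | ( | std::vector< struct CompletionOutput > | completion_result_list, |

| std::vector< std::string > | stopping_words ) | protected |

◆ find_stop_word()

| StopType Llama::find_stop_word | ( | std::vector< struct CompletionOutput > | completion_result_list, |

| std::string | stopping_word ) | protected |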

◆ format_chat_prompt()

| std::string Llama::format_chat_prompt | ( | std::vector< struct common_chat_msg > | chat_msgs, |

| bool | add_ass ) |

◆ generate_embeddings() [1/2]

| struct EmbeddingsOuput Llama::generate_embeddings | ( | const std::string & | input_prompt, |

| int | normalization = 2 ) |

◆ generate_embeddings() [2/2]

| struct EmbeddingsOuput Llama::generate_embeddings | ( | const std::vector< llama_token > & | tokens, |

| int | normalization = 2 ) |
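The reference does not define the `normalization` values. In llama.cpp's embedding tooling, a value of 2 conventionally selects Euclidean (L2) normalization, and the sketch below assumes the default here follows that convention. `l2_normalize` is a hypothetical helper written for illustration, not a member of this class.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper: scales a vector to unit Euclidean length, which is
// what normalization = 2 is assumed to mean in this sketch.
std::vector<float> l2_normalize(const std::vector<float> &embd) {
  double sum_sq = 0.0;
  for (float v : embd) {
    sum_sq += static_cast<double>(v) * v;
  }
  const double norm = std::sqrt(sum_sq);

  std::vector<float> out(embd.size(), 0.0f);
  if (norm == 0.0) {
    return out; // an all-zero input stays all-zero
  }
  for (std::size_t i = 0; i < embd.size(); ++i) {
    out[i] = static_cast<float>(embd[i] / norm);
  }
  return out;
}
```

With unit-length vectors, the dot product of two embeddings equals their cosine similarity, which is a common reason to normalize this way.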

◆ generate_response() [1/2]

| struct ResponseOutput Llama::generate_response | ( | const std::string & | input_prompt, |

| GenerateResponseCallback | callbakc = nullptr, | ||

| std::vector< std::string > | stop = {} ) |

◆ generate_response() [2/2]

| struct ResponseOutput Llama::generate_response | ( | const std::string & | input_prompt, |

| struct common_params_sampling | sparams, | ||

| GenerateResponseCallback | callbakc = nullptr, | ||

| std::vector< std::string > | stop = {} ) |

◆ get_ctx()

| const struct llama_context * Llama::get_ctx | ( | ) | inline |

◆ get_float_metadata() [1/2]

| float Llama::get_float_metadata | ( | const std::string & | key, |

| size_t | size ) |

◆ get_float_metadata() [2/2]

| float Llama::get_float_metadata | ( | const std::string & | model_name, |

| const std::string & | key, | ||

| size_t | size ) |

◆ get_int_metadata() [1/2]

| int Llama::get_int_metadata | ( | const std::string & | key, |

| size_t | size ) |

◆ get_int_metadata() [2/2]

| int Llama::get_int_metadata | ( | const std::string & | model_name, |

| const std::string & | key, | ||

| size_t | size ) |

◆ get_metadata() [1/3]

| struct Metadata Llama::get_metadata | ( | ) |

◆ get_metadata() [2/3]

| std::string Llama::get_metadata | ( | const std::string & | key, |

| size_t | size ) |

◆ get_metadata() [3/3]

| std::string Llama::get_metadata | ( | const std::string & | model_name, |

| const std::string & | key, | ||

| size_t | size ) |

◆ get_model()

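| const struct llama_model * Llama::get_model | ( | ) | inline |

◆ get_n_ctx()

| int Llama::get_n_ctx | ( | ) | inline |

◆ get_n_ctx_train()

| int Llama::get_n_ctx_train | ( | ) | inline |

◆ get_n_embd()

| int Llama::get_n_embd | ( | ) | inline |

◆ get_n_vocab()

| int Llama::get_n_vocab | ( | ) | inline |

◆ get_probs()

| std::vector< struct TokenProb > Llama::get_probs | ( | ) | protected |

◆ get_token_bos()

| llama_token Llama::get_token_bos | ( | ) | inline |

◆ get_token_eos()

| llama_token Llama::get_token_eos | ( | ) | inline |

◆ get_token_sep()

| llama_token Llama::get_token_sep | ( | ) | inline |

◆ get_vocab()

| const struct llama_vocab * Llama::get_vocab | ( | ) | inline |

◆ is_embedding()

| bool Llama::is_embedding | ( | ) | inline |

◆ is_eog()

| bool Llama::is_eog | ( | ) | inline |

◆ is_reranking()

| bool Llama::is_reranking | ( | ) | inline |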

◆ list_loras()

| std::vector< struct LoRA > Llama::list_loras | ( | ) |

◆ load_prompt()

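| void Llama::load_prompt | ( | const std::string & | input_prompt, |

| bool | add_pfx, | ||

| bool | add_sfx ) | protected virtual |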

Reimplemented in llava_ros::Llava.

◆ rank_document()

| float Llama::rank_document | ( | const std::string & | query, |

| const std::string & | document ) |

◆ rank_documents()

| std::vector< float > Llama::rank_documents | ( | const std::string & | query, |

| const std::vector< std::string > & | documents ) |
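`rank_documents` returns one `float` per document. A caller will usually want the documents ordered by that score. The sketch below assumes the scores come back in the same order as `documents` and that a higher score means a more relevant document; neither is stated in this reference. `order_by_score` is a hypothetical helper, not part of the class.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical helper: returns document indices ordered from the highest
// score to the lowest. Ties keep their original relative order.
std::vector<std::size_t> order_by_score(const std::vector<float> &scores) {
  std::vector<std::size_t> idx(scores.size());
  std::iota(idx.begin(), idx.end(), std::size_t{0});
  std::stable_sort(idx.begin(), idx.end(),
                   [&scores](std::size_t a, std::size_t b) {
                     return scores[a] > scores[b];
                   });
  return idx;
}
```

Sorting indices rather than the strings themselves avoids copying the documents and keeps the mapping back to the original input.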

◆ reset()

| void Llama::reset | ( | ) | virtual |

Reimplemented in llava_ros::Llava.

◆ sample()

| struct CompletionOutput Llama::sample | ( | ) | protected |

◆ tokenize()

| std::vector< llama_token > Llama::tokenize | ( | const std::string & | text, |

| bool | add_bos, | ||

| bool | special = false ) |

◆ truncate_tokens()

| std::vector< llama_token > Llama::truncate_tokens | ( | const std::vector< llama_token > & | tokens, |

| int | limit_size, | ||

| bool | add_eos = true ) |
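The signature suggests that `truncate_tokens` caps a token sequence at `limit_size` and optionally terminates it with an end-of-sequence token. The exact behavior is not documented here, so the sketch below is one plausible reading rather than the library's implementation: it keeps the first `limit_size` tokens and then appends EOS when requested and not already present. The `eos` parameter and the name `truncate_tokens_sketch` are hypothetical; the real member presumably obtains the EOS token from the model.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using llama_token = std::int32_t; // llama.cpp defines llama_token as int32_t

// Hypothetical stand-in for Llama::truncate_tokens. Assumption: the result
// may hold limit_size tokens plus one trailing EOS.
std::vector<llama_token>
truncate_tokens_sketch(const std::vector<llama_token> &tokens, int limit_size,
                       llama_token eos, bool add_eos = true) {
  assert(limit_size >= 0);
  std::vector<llama_token> out = tokens;
  if (out.size() > static_cast<std::size_t>(limit_size)) {
    out.resize(static_cast<std::size_t>(limit_size)); // drop the tail
  }
  if (add_eos && (out.empty() || out.back() != eos)) {
    out.push_back(eos); // terminate the sequence
  }
  return out;
}
```

If the real implementation instead reserves one of the `limit_size` slots for EOS, the resize would use `limit_size - 1`; check the source before relying on either reading.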

◆ update_loras()

| void Llama::update_loras | ( | std::vector< struct LoRA > | loras | ) |

Member Data Documentation

◆ canceled

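| bool Llama::canceled | protected |

◆ ctx

| struct llama_context * Llama::ctx | protected |

◆ ga_i

| int32_t Llama::ga_i | protected |

◆ llama_init

| struct common_init_result Llama::llama_init | protected |

◆ lora_adapters

| std::vector< common_adapter_lora_info > Llama::lora_adapters | protected |

◆ model

| struct llama_model * Llama::model | protected |

◆ mutex

| std::recursive_mutex Llama::mutex | private |

◆ n_consumed

| int32_t Llama::n_consumed | protected |

◆ n_past

| int32_t Llama::n_past | protected |

◆ params

| struct common_params Llama::params | protected |

◆ prompt_tokens

| std::vector< llama_token > Llama::prompt_tokens | protected |

◆ sampler

| struct common_sampler * Llama::sampler | protected |

◆ spinner

| llama_utils::Spinner Llama::spinner | protected |

◆ system_prompt

| std::string Llama::system_prompt | protected |

◆ threadpool

| struct ggml_threadpool * Llama::threadpool | protected |

◆ threadpool_batch

| struct ggml_threadpool * Llama::threadpool_batch | protected |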

The documentation for this class was generated from the following files:
