Public Member Functions

| Return type | Member function |
| --- | --- |
| Runnable[LanguageModelInput, BaseMessage] | bind_tools (self, Sequence[Union[Dict[str, Any], Type[BaseModel], Callable, BaseTool]] tools, *, Optional[Union[dict, str, Literal["auto", "all", "one", "any"], bool]] tool_choice="auto", Literal["function_calling", "json_schema", "json_mode"] method="function_calling", **Any kwargs) |
| Runnable[LanguageModelInput, Union[Dict, BaseModel]] | with_structured_output (self, Optional[Union[Dict, Type[BaseModel], Type]] schema=None, *, bool include_raw=False, Literal["function_calling", "json_schema", "json_mode"] method="function_calling", **Any kwargs) |
| Dict | validate_environment (cls, Dict values) |
| None | cancel (self) |

Protected Member Functions

| Return type | Member function |
| --- | --- |
| Dict[str, Any] | _default_params (self) |
| str | _llm_type (self) |
| str | _generate_prompt (self, List[dict[str, str]] messages, **kwargs) |
| List[Dict[str, str]] | _convert_content (self, Union[Dict[str, str], str, List[str], List[Dict[str, str]]] content) |
| list[dict[str, str]] | _convert_message_to_dict (self, BaseMessage message) |
| Tuple[Dict[str, str], Optional[str], Optional[str]] | _extract_data_from_messages (self, List[BaseMessage] messages) |
| List[BaseMessage] | _create_chat_generations (self, GenerateResponse.Result response, str method) |
| str | _generate (self, List[BaseMessage] messages, Optional[List[str]] stop=None, Optional[CallbackManagerForLLMRun] run_manager=None, **Any kwargs) |
| Iterator[ChatGenerationChunk] | _stream (self, List[BaseMessage] messages, Optional[List[str]] stop=None, Optional[CallbackManagerForLLMRun] run_manager=None, **Any kwargs) |
| GenerateResponse.Result | _create_action_goal (self, str prompt, Optional[List[str]] stop=None, Optional[str] image_url=None, Optional[np.ndarray] image=None, Optional[str] tools_grammar=None, **kwargs) |

◆ _convert_content()

List[Dict[str, str]] llama_ros.langchain.chat_llama_ros.ChatLlamaROS._convert_content (self, Union[Dict[str, str], str, List[str], List[Dict[str, str]]] content)

protected
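
The signature above only states that several content shapes go in and a list of dictionaries comes out. As a rough illustration of that kind of normalization, and not the actual llama_ros implementation, the standalone sketch below maps each accepted input shape to a list of dictionaries. The function name `normalize_content` and the `{"type": "text", "text": ...}` layout are assumptions made for this example.

```python
from typing import Dict, List, Union

# Mirrors the parameter type listed in the signature above.
Content = Union[Dict[str, str], str, List[str], List[Dict[str, str]]]


def normalize_content(content: Content) -> List[Dict[str, str]]:
    """Hypothetical helper: coerce any accepted content shape into a list of dicts."""
    if isinstance(content, str):
        # A bare string becomes a single text part (assumed layout).
        return [{"type": "text", "text": content}]
    if isinstance(content, dict):
        # A single dict is already one part; copy it and wrap it in a list.
        return [dict(content)]
    if isinstance(content, list):
        parts: List[Dict[str, str]] = []
        for item in content:
            # Normalize each element and flatten the results.
            parts.extend(normalize_content(item))
        return parts
    raise TypeError(f"unsupported content type: {type(content).__name__}")


if __name__ == "__main__":
    print(normalize_content("hello"))
    print(normalize_content(["a", {"type": "text", "text": "b"}]))
```

Returning one uniform shape keeps later steps, such as prompt rendering, free of per-type branching.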

◆ _convert_message_to_dict()

list[dict[str, str]] llama_ros.langchain.chat_llama_ros.ChatLlamaROS._convert_message_to_dict (self, BaseMessage message)

protected

◆ _create_chat_generations()

List[BaseMessage] llama_ros.langchain.chat_llama_ros.ChatLlamaROS._create_chat_generations (self, GenerateResponse.Result response, str method)

protected

◆ _default_params()

Dict[str, Any] llama_ros.langchain.chat_llama_ros.ChatLlamaROS._default_params (self)

protected

◆ _extract_data_from_messages()

Tuple[Dict[str, str], Optional[str], Optional[str]] llama_ros.langchain.chat_llama_ros.ChatLlamaROS._extract_data_from_messages (self, List[BaseMessage] messages)

protected

◆ _generate()

str llama_ros.langchain.chat_llama_ros.ChatLlamaROS._generate (self, List[BaseMessage] messages, Optional[List[str]] stop = None, Optional[CallbackManagerForLLMRun] run_manager = None, **Any kwargs)

protected

◆ _generate_prompt()

str llama_ros.langchain.chat_llama_ros.ChatLlamaROS._generate_prompt (self, List[dict[str, str]] messages, **kwargs)

protected

◆ _llm_type()

str llama_ros.langchain.chat_llama_ros.ChatLlamaROS._llm_type (self)

protected

◆ _stream()

Iterator[ChatGenerationChunk] llama_ros.langchain.chat_llama_ros.ChatLlamaROS._stream (self, List[BaseMessage] messages, Optional[List[str]] stop = None, Optional[CallbackManagerForLLMRun] run_manager = None, **Any kwargs)

protected

◆ bind_tools()

Runnable[LanguageModelInput, BaseMessage] llama_ros.langchain.chat_llama_ros.ChatLlamaROS.bind_tools (self, Sequence[Union[Dict[str, Any], Type[BaseModel], Callable, BaseTool]] tools, *, Optional[Union[dict, str, Literal["auto", "all", "one", "any"], bool]] tool_choice = "auto", Literal["function_calling", "json_schema", "json_mode"] method = "function_calling", **Any kwargs)

◆ with_structured_output()

Runnable[LanguageModelInput, Union[Dict, BaseModel]] llama_ros.langchain.chat_llama_ros.ChatLlamaROS.with_structured_output (self, Optional[Union[Dict, Type[BaseModel], Type]] schema = None, *, bool include_raw = False, Literal["function_calling", "json_schema", "json_mode"] method = "function_calling", **Any kwargs)

◆ jinja_env

ImmutableSandboxedEnvironment llama_ros.langchain.chat_llama_ros.ChatLlamaROS.jinja_env

static

Initial value:

    = ImmutableSandboxedEnvironment(
        loader=jinja2.BaseLoader(),
        trim_blocks=True,
        lstrip_blocks=True,
    )
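
To show what these constructor arguments do in practice, here is a minimal sketch that builds an environment with the same settings and renders a small chat-style template. The template text and the `render_chat` helper are made up for illustration and are not taken from llama_ros; the sketch assumes the `jinja2` package is installed.

```python
from jinja2 import BaseLoader
from jinja2.sandbox import ImmutableSandboxedEnvironment

# Same constructor arguments as the initial value listed above.
env = ImmutableSandboxedEnvironment(
    loader=BaseLoader(),
    trim_blocks=True,    # drop the newline that follows a block tag
    lstrip_blocks=True,  # drop indentation in front of a block tag
)

# Illustrative template only; real chat templates are more involved.
TEMPLATE = (
    "{% for m in messages %}\n"
    "    {% if m['content'] %}\n"
    "<|{{ m['role'] }}|>{{ m['content'] }}\n"
    "    {% endif %}\n"
    "{% endfor %}\n"
)


def render_chat(messages):
    """Render a list of role/content dicts with the sketch template."""
    return env.from_string(TEMPLATE).render(messages=messages)


if __name__ == "__main__":
    print(render_chat([
        {"role": "system", "content": "Be brief."},
        {"role": "user", "content": "Hi"},
    ]))
```

With `trim_blocks` and `lstrip_blocks` enabled, the indented `{% if %}` and `{% endif %}` lines leave no blank lines or stray spaces in the rendered prompt, so the template can be laid out readably without changing the output.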

◆ use_default_template

bool llama_ros.langchain.chat_llama_ros.ChatLlamaROS.use_default_template = False

static

◆ use_gguf_template

bool llama_ros.langchain.chat_llama_ros.ChatLlamaROS.use_gguf_template = True

static

The documentation for this class was generated from the following file:

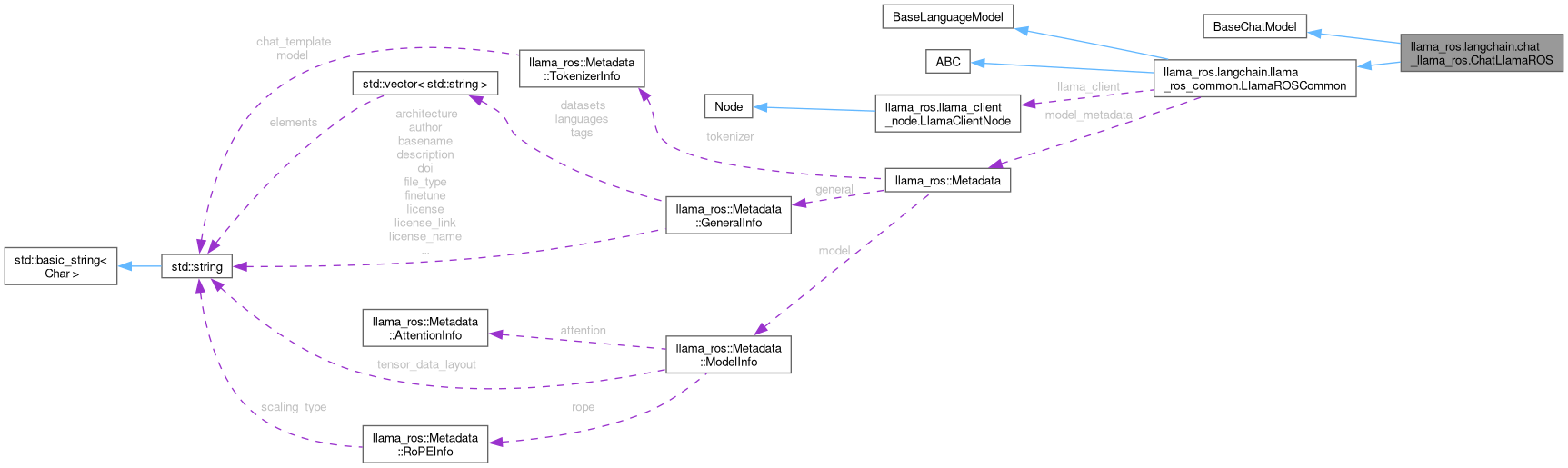

Public Member Functions inherited from llama_ros.langchain.llama_ros_common.LlamaROSCommon

Static Public Attributes inherited from llama_ros.langchain.llama_ros_common.LlamaROSCommon

Protected Member Functions inherited from llama_ros.langchain.llama_ros_common.LlamaROSCommon