| Dict[str, Any] | _default_params (self) |
| str | _llm_type (self) |
| str | _call (self, str prompt, Optional[List[str]] stop=None, Optional[CallbackManagerForLLMRun] run_manager=None, **Any kwargs) |
| Iterator[GenerationChunk] | _stream (self, str prompt, Optional[List[str]] stop=None, Optional[CallbackManagerForLLMRun] run_manager=None, **Any kwargs) |
| GenerateResponse.Result | _create_action_goal (self, str prompt, Optional[List[str]] stop=None, Optional[str] image_url=None, Optional[np.ndarray] image=None, Optional[str] tools_grammar=None, **kwargs) |

◆ _call()

str llama_ros.langchain.llama_ros.LlamaROS._call (self, str prompt, Optional[List[str]] stop = None, Optional[CallbackManagerForLLMRun] run_manager = None, **Any kwargs)

protected
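The signature above follows the shape LangChain expects of a text-completion wrapper: a prompt string in, a completed string out, with optional stop sequences. The sketch below only illustrates that contract. `FakeLLM` is a hypothetical stand-in, not the real `LlamaROS` class, and truncating the text at the first stop sequence is an assumption; the source does not show how `_call` handles `stop`.

```python
from typing import Any, List, Optional


class FakeLLM:
    """Hypothetical stand-in that mirrors the _call signature only."""

    def _call(
        self,
        prompt: str,
        stop: Optional[List[str]] = None,
        run_manager: Optional[Any] = None,
        **kwargs: Any,
    ) -> str:
        # Pretend backend: return the prompt in upper case.
        text = prompt.upper()
        # Assumption: cut the text at the earliest stop sequence found.
        for sequence in stop or []:
            index = text.find(sequence)
            if index != -1:
                text = text[:index]
        return text


result = FakeLLM()._call("hello world. bye", stop=["."])
```

With a real LangChain LLM subclass, callers normally go through the public interface rather than calling `_call` directly.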

◆ _default_params()

Dict[str, Any] llama_ros.langchain.llama_ros.LlamaROS._default_params (self)

protected

◆ _llm_type()

str llama_ros.langchain.llama_ros.LlamaROS._llm_type (self)

protected

◆ _stream()

Iterator[GenerationChunk] llama_ros.langchain.llama_ros.LlamaROS._stream (self, str prompt, Optional[List[str]] stop = None, Optional[CallbackManagerForLLMRun] run_manager = None, **Any kwargs)

protected
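Because `_stream` returns an iterator of chunks rather than a single string, a caller consumes it incrementally and can concatenate the chunk texts to recover the full completion. The sketch below shows that consumption pattern under stated assumptions: `Chunk` is a hypothetical stand-in for LangChain's `GenerationChunk` (only its `text` field is modelled), and `FakeStreamingLLM` and `collect` are illustrative names, not part of `llama_ros`.

```python
from dataclasses import dataclass
from typing import Any, Iterator, List, Optional


@dataclass
class Chunk:
    """Hypothetical stand-in for GenerationChunk; models only .text."""

    text: str


class FakeStreamingLLM:
    """Hypothetical stand-in that mirrors the _stream signature only."""

    def _stream(
        self,
        prompt: str,
        stop: Optional[List[str]] = None,
        run_manager: Optional[Any] = None,
        **kwargs: Any,
    ) -> Iterator[Chunk]:
        # Pretend backend: emit the prompt back one word per chunk.
        for word in prompt.split():
            yield Chunk(text=word + " ")


def collect(chunks: Iterator[Chunk]) -> str:
    """Join streamed chunk texts into one string."""
    return "".join(chunk.text for chunk in chunks).strip()


full_text = collect(FakeStreamingLLM()._stream("a b c"))
```

Yielding chunks lets an interface display partial output as it arrives instead of waiting for the whole response.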

◆ get_num_tokens()

int llama_ros.langchain.llama_ros.LlamaROS.get_num_tokens (self, str text)
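A token count returned as an `int` is typically used to check a prompt against a context budget before sending it. The sketch below illustrates that use only. `FakeTokenCounter` is a hypothetical stand-in whose whitespace split is an assumption replacing the model's real tokenizer, and `fits_context`, `n_ctx`, and `reserve` are illustrative names that do not come from `llama_ros`.

```python
class FakeTokenCounter:
    """Hypothetical stand-in that mirrors the get_num_tokens signature."""

    def get_num_tokens(self, text: str) -> int:
        # Assumption: whitespace split stands in for a real tokenizer.
        return len(text.split())


def fits_context(llm: FakeTokenCounter, prompt: str, n_ctx: int, reserve: int) -> bool:
    """Return True if the prompt plus reserved output tokens fits in n_ctx."""
    return llm.get_num_tokens(prompt) + reserve <= n_ctx


counter = FakeTokenCounter()
fits = fits_context(counter, "one two three", n_ctx=8, reserve=4)
```

Real tokenizers usually produce more tokens than a whitespace split, so a budget check against an actual model should use the model's own count.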

The documentation for this class was generated from the following file:

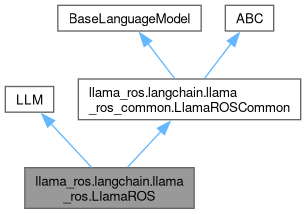

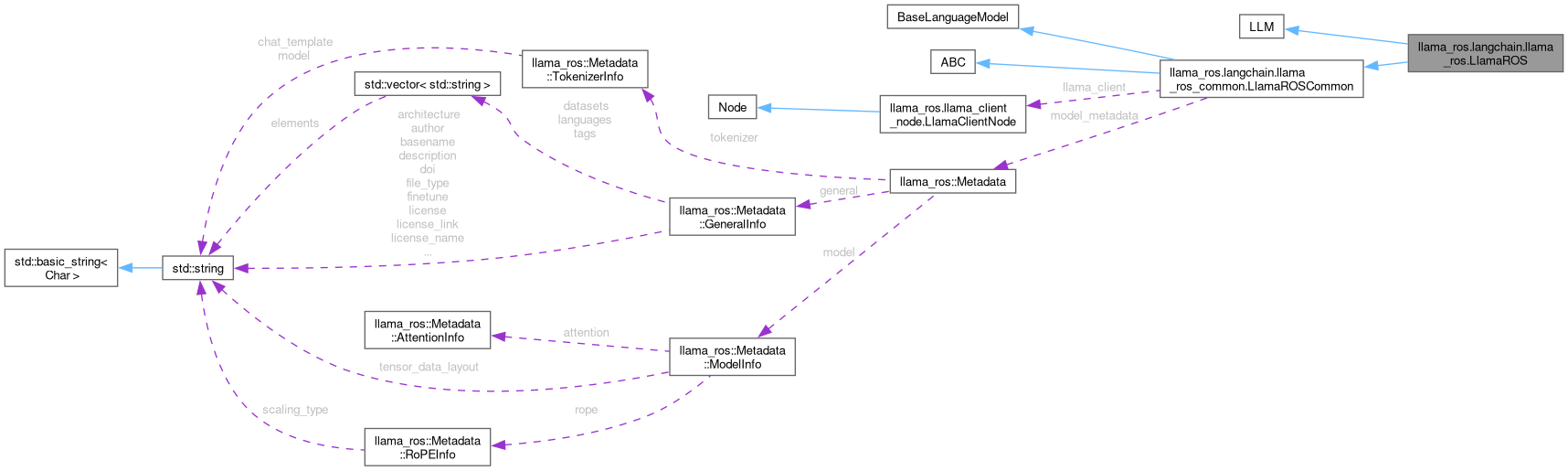

Public Member Functions inherited from llama_ros.langchain.llama_ros_common.LlamaROSCommon

Protected Member Functions inherited from llama_ros.langchain.llama_ros_common.LlamaROSCommon

Static Public Attributes inherited from llama_ros.langchain.llama_ros_common.LlamaROSCommon